I have written numerous times about how much I like Google's App Engine platform and about how I've tried to port as many of my applications to it as possible. The idea of App Engine is still glorious, but I have now been converted to Cloud Run.

The primary selling point? It has literally reduced my application's bill to $0. That's a 100% reduction in cost from App Engine to Cloud Run. It's absolutely wild, and it's now my new favorite thing.

Pour one out for App Engine because Cloud Run is here to stay.

Aside: a (brief) history of App Engine

Before I explain how Cloud Run manages to make my application free to run, I need to first run you through a brief history of App Engine, why it was great, why it became less great, and how Cloud Run could eat its lunch like that.

App Engine v1

Google App Engine launched as a preview in 2008 and became generally available in 2011 as a one-stop solution to host infinitely scalable applications. Prior to App Engine, if you wanted to host a web application, you had to either commit to a weird framework and go with that framework's cloud solution, or you had to spin up a bunch of virtual machines (or, god help you, Lambda functions) to handle your web traffic, your database, your file systems, maybe tie into a CDN, and also set up Let's Encrypt or something similar to handle your TLS certificates.

From the perspective of an application owner or application developer, that's a lot of work that doesn't directly deal with application stuff and ends up just wasting tons of everyone's time.

App Engine was Google's answer to that, something that we would now call "serverless".

The premise was simple: upload a zip file with your application, some YAML files with some metadata (concerning scaling, performance, etc.), and App Engine would take care of all the annoying IT stuff for you. In fact, they tried to hide as much of it from you as possible; they wanted you to focus on the application logic, not the nitty-gritty backend details.

(This allowed Google to do all kinds of tricks in the background to make things better and faster, since the implementation details of their infrastructure were generally out of bounds for your application.)

App Engine's killer feature was that it bundled a whole bunch of services together, things that you'd normally have to set up yourself and pay extra for. This included, but was not limited to:

- The web hosting basics:

  - File hosting

  - A CDN service

  - An execution environment in your language of choice (Java, Python, PHP, etc.)

- A cheap NoSQL database to store all your data

- A memcached memory store

- A scheduler that'll hit any of your web endpoints whenever you want

- A task queue for background tasks

- A service that would accept e-mail and transform it into an HTTP POST request

All of this came with a generous free tier (truly free) where you could try stuff out and generally host small applications with no cost at all. If your application were to run up against the free-tier limits, it would just halt those services until the next day when all of the counters reset and your daily limits restarted.

(Did I mention e-mail? I had so many use cases that dealt with accepting e-mail from other systems and taking some action. This was a godsend.)

The free tier gave you 28 hours of instance time per day (that is, 28 hours of a single instance of the lowest class). For trying stuff out, that was plenty, especially when your application only had a few users and went unused for hours at a time.

(Yes, if you upgraded your instance type one notch that became 14 hours free, but still.)

To use all of the bonus features, you generally had to link against (more or less) the App Engine SDK, which took care of all of the details behind the scenes.

This was everything that you needed to build a scalable web application, and it did its job well.

App Engine v2

Years went by, and Google made some big changes to App Engine. To me, these changes went against the great promise of App Engine, but they make sense when looking at Cloud Run as the App Engine successor.

App Engine v2 added support for newer versions of the languages that App Engine v1 had supported (which was good), but it also took away a lot of the one-stop-shop power (which was bad). The stated reason was to remove a lot of the Google Cloud-specific stuff and make the platform a bit more open and compatible with other clouds.

(While that's generally good, the magic of App Engine was that everything was built in, for free.)

Now, an App Engine application could no longer have free memcached data; instead, you could pay for a Memorystore instance. For large applications, it probably didn't matter, but for small ones that cost basically nothing to run, this made memcached untenable.

Similarly, the e-mail service was discontinued, and you were encouraged to move to Twilio SendGrid, which could do something similar. Google was nice enough to give all SendGrid customers a free tier that just about made up for what was lost. The big problem was that all of the built-in tools were gone. Previously, the App Engine local development console had a place where you could type in an e-mail and send it to your application; now you had to write your own tools.

The scheduler and task queue systems were promoted out of App Engine and into Google Cloud, but the App Engine tooling continued to support them, so they required only minimal changes on the application side.

The cheap NoSQL database, Datastore, was also promoted out of App Engine, and there were no changes to make whatsoever. Yay.

The App Engine SDK was removed, and an App Engine application was simply another application in the cloud, with annoying dependencies and third-party integrations.

(Yes, technically Google added back support for the App Engine SDK years later, but meh.)

Overall, for people who used App Engine exclusively for purpose-built App Engine things, v2 was a major step down from v1. Yes, it moved App Engine toward a more standards-based approach, but there weren't standards around half the stuff that App Engine was doing. In the push to make it more open and in line with other clouds, it lost a lot of its power (other clouds weren't doing what App Engine was doing, which is why I was using App Engine in the first place).

So, why Cloud Run?

Cloud Run picks up where App Engine v2 left off. With all of the App Engine-specific things removed, the next logical jump was to give Google a container image instead of a zip file and let it do its thing. App Engine had already experimented with this with the "Flexible" environment, but its pricing was not favorable compared to the "Standard" offering for the kinds of projects that I had.

From a build perspective, Cloud Run gives you a bit more flexibility than App Engine. You can give Google a Dockerfile and have it take care of all the compiling and such, or you can do most of that locally and give Google a much simpler Dockerfile with your pre-compiled application files. I don't want to get too deep into it, but you have options.

Cloud Run obviously runs your application as a container. Did App Engine? Maybe? Maybe not? I figured that it would have, but that's for Google to decide (the magic of App Engine).

But here's the thing: App Engine was billed as an instance, not a container.

Billing: App Engine vs. Cloud Run

I'm going to focus on the big thing, which is instance versus container billing. Everything else is comparable (data traffic, etc.).

App Engine billed you on instance time (wall time), normalized to units of the smallest instance class that they support. To run your application at all, there was a 15-minute minimum cost. If your application wasn't doing anything for 15 minutes, then App Engine would shut it down and you wouldn't be billed for that time. But for all other time, anytime that there was at least one instance running, you were billed for that instance (more or less as if you had spun up a VM yourself for the time that it was up).

For a lightweight web application, this kind of billing isn't ideal because most of the work that your application does is a little bit of processing centered around database calls. Most of the time the application is either not doing anything at all (no requests are coming in) or it's waiting on a response from a database. The majority of the instance CPU goes unused.

Cloud Run bills on CPU time, that is, the time that the CPU is actually used. So, for that same application, if it's sitting around doing nothing or waiting on a database response, then it's not being billed. And there's no 15-minute minimum or anything. For example, if your application does some request parsing, permissions checks, etc., and then sits around waiting for 1 second for a database query to return, then you'll be billed for like 0.0001 seconds of CPU time, which is great (because your application did very little actual CPU work in that second it took for the request to complete).

Cloud Run gives you basically 48 hours of "vCPU-seconds" (that is, a single CPU churning at 100%) per month. So for an application that sits around doing nothing most of the time, the odds are that you'll never have to pay for any CPU utilization. For a daily average, this comes out to about 1.5 hours of CPU time free per day. Yes, you also pay for the amount of memory that your app uses while it's handling requests, but my app uses like 70MB of memory because it's a web application.
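As a rough check on those numbers, here is a small sketch in Go that converts the roughly 48 free hours per month into vCPU-seconds and a daily average. The 48-hour figure is the one quoted above; the 30-day month and the function names are assumptions made purely for illustration.

```go
package main

import "fmt"

// freeHoursPerMonth is the approximate monthly free allowance quoted in the text.
const freeHoursPerMonth = 48.0

// vCPUSeconds converts hours of a single fully busy vCPU into vCPU-seconds.
func vCPUSeconds(hours float64) float64 {
	return hours * 3600
}

// dailyAverageHours spreads a monthly allowance evenly across the given number of days.
func dailyAverageHours(monthlyHours float64, daysInMonth int) float64 {
	return monthlyHours / float64(daysInMonth)
}

func main() {
	fmt.Printf("monthly allowance: %.0f vCPU-seconds\n", vCPUSeconds(freeHoursPerMonth))
	fmt.Printf("daily average: %.1f hours\n", dailyAverageHours(freeHoursPerMonth, 30))
}
```

With a 30-day month this works out to 172,800 vCPU-seconds, or about 1.6 hours per day, which is in line with the rough figure above.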

(For me, my app has a nightly job that eats up a bunch of CPU, and then it does just about nothing all day.)

Overall, this is what moved me to Cloud Run. I'm billed for the resources that I'm actually using when I'm actually using them, and the specifics around instances and such are no longer my concern.

This also means that I can use a more microservice-based approach, since I'm billed on what my application does, not what resources Google spins up in the background to support it. With App Engine, running separate "services" would have been too costly (each with its own instances, etc.). With Cloud Run, it's perfect, and I can experiment without needing to worry about massive cost changes.

What's the catch?

App Engine's deployment tools are top-notch, even in App Engine v2. When you deploy your application (once you figure out all the right flags), it'll get your application up and running, deploy your cron jobs, and ensure that your Datastore indexes are pushed.

With Cloud Run, you don't get any of the extra stuff.

I've put together a list of things that you'll have to do to migrate your application from App Engine to Cloud Run, sorted from easiest to hardest.

Easy: Deploy your application

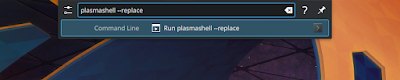

This is essentially gcloud run deploy with some options, but it's extremely simple and straightforward. Migrating from App Engine will take like 2 seconds.

Easy: Get rid of app.yaml

You won't need app.yaml anymore, so remove it. If you had environment variables in there, move them to their own file and update your gcloud run deploy command to include --env-vars-file.

In Cloud Run, everything is HTTPS, so you don't have to worry about insecure HTTP endpoints.

There are no "warmup" endpoints or anything, so just make sure that your container does what it needs to when it starts because as soon as it can receive an HTTP request, it will.

If you had configured a custom instance class, you may need to tweak your Cloud Run service with parameters that better match your old settings. I had only used an F2 class because the F1 class had a limitation on the number of concurrent requests that it could receive, and Cloud Run has no such limitation. You can also configure the maximum number of concurrent requests much, much higher.

Similarly, all of the scaling parameters are... different, and you can deal with those in Cloud Run as necessary. Let your app run for a while and see how Cloud Run behaves; it's definitely a different game from App Engine.

Easy: Deploy your task queues

Starting with App Engine v2, you already had to deploy your own task queues (App Engine v1 used to take care of that for you), so you don't have to change a single thing with deployment. Note that you will have to change how those task queues are used; see below.

Easy: Deploy your Datastore indexes

This is essentially gcloud datastore indexes create index.yaml with some options, and you should already have index.yaml sitting around, so just add this to your deployment code.

Easy: Rip out all "/_ah" endpoints

App Engine liked to have you put special App Engine things under "/_ah", so I stashed all of my cron job and task queue endpoints in there. Ordinarily, this would be fine, except that Cloud Run treats "/_ah" as special and will quietly fail all requests to any endpoint under it with a permission-denied error. I wasted way too much time trying to figure out what was going on before I realized that it was Cloud Run doing something sneaky and undocumented in the background.

Move any endpoints under "/_ah" to literally any other path and update any references to them.

Easy: Create a Dockerfile

Create a Dockerfile that's as simple or as complex as you want. I wanted a minimal set of changes from my current workflow, so I had my Dockerfile just include all of the binaries that get compiled locally as well as any static files.

If you want, you can set up a multi-stage Dockerfile that has separate, short-lived containers for building and compiling and that ultimately ends up with a single, small container for your application. I'll eventually get there, but actually deploying into Cloud Run took precedence for me over neat CI/CD stuff.

Easy: Detect Cloud Run in production

I have some simple code that detects if it's in Google Cloud or not so that it does smart production-related things if it is. In particular, logging in production is JSON-based so that Stackdriver can pick it up properly.

The old "GAE_*" and "GOOGLE_*" environment variables are gone; you'll only get "K_SERVICE" and friends to tell you a tiny amount of information about your Cloud Run environment. You'll basically get back the service name and that's it.

If you want your project ID or region, you'll have to pull those from the "computeMetadata/v1" endpoints. It's super easy; it's just something that you'll have to do.

For more information on using the "computeMetadata/v1" endpoints, see this guide.

For more information about the environment variables available in Cloud Run, see this document.

Easy: "Unlink" your Datastore

Way back in the day, Datastore was merely a part of App Engine. At some point, Google spun it out into its own thing, but for older projects, it's still tied to App Engine. Make sure that you go to your Datastore's admin screen in Google Cloud Console and unlink it from your App Engine project.

This has no practical effects other than:

- You can no longer put your Datastore in read-only mode.

- You can disable your App Engine project without also disabling your Datastore.

It may take 10-20 minutes for the operation to complete, so just plan on doing this early so it doesn't get in your way.

To learn more about unlinking Datastore from App Engine, see this article.

Medium: Change your task queue code

If you were using App Engine for task queues, then you were using special App Engine tasks. Since you're not using App Engine anymore, you have to use the more generic HTTP tasks.

In order to do this, you'll need to know the URL for your Cloud Run service. You can technically cheat and use the information in the HTTP request to generally get a URL that (should) work, but I opted to use the "${region}-run.googleapis.com/apis/serving.knative.dev/v1/namespaces/${project-id}/services/${service-name}" endpoint, which returns the Cloud Run URL, among other things. I grabbed that value when my container started and used it anytime I had to create a task.

(And yes, you'll need to get an access token from another "computeMetadata/v1" endpoint in order to access that API.)

For more information on that endpoint, see this reference.

Once you have the Cloud Run URL, the main difference between an App Engine task and an HTTP task is that App Engine tasks only have the path while the HTTP task has the full URL to hit. I had to change up my internals a bit so that the application knew its base URL, but it wasn't much work.
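To make the moving parts concrete, here is a rough sketch in Go of the three small pieces involved: building the lookup endpoint, pulling the service URL out of the response, and joining that base URL with a task path. I'm assuming an "https://" scheme on the endpoint and that the URL lives under "status.url" in the returned JSON (check the reference to confirm); the function names, sample project, and sample paths are all hypothetical. Fetching the access token and making the HTTP call are left out.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// serviceLookupURL builds the endpoint that describes a Cloud Run service.
func serviceLookupURL(region, projectID, service string) string {
	return fmt.Sprintf(
		"https://%s-run.googleapis.com/apis/serving.knative.dev/v1/namespaces/%s/services/%s",
		region, projectID, service)
}

// serviceURLFromResponse extracts the service URL from the JSON response body.
// The "status.url" location is an assumption based on the Knative Service format.
func serviceURLFromResponse(body []byte) (string, error) {
	var svc struct {
		Status struct {
			URL string `json:"url"`
		} `json:"status"`
	}
	if err := json.Unmarshal(body, &svc); err != nil {
		return "", err
	}
	if svc.Status.URL == "" {
		return "", errors.New("service response has no status.url")
	}
	return svc.Status.URL, nil
}

// taskURL turns a path-only App Engine style target into the full URL an HTTP task needs.
func taskURL(baseURL, path string) string {
	return strings.TrimRight(baseURL, "/") + "/" + strings.TrimLeft(path, "/")
}

func main() {
	// A trimmed-down, made-up response body used only for demonstration.
	sample := []byte(`{"status":{"url":"https://example-service-abc123-uc.a.run.app"}}`)
	base, err := serviceURLFromResponse(sample)
	if err != nil {
		panic(err)
	}
	fmt.Println(serviceLookupURL("us-central1", "example-project", "example-service"))
	fmt.Println(taskURL(base, "/tasks/send-report"))
}
```

Resolving the base URL once at startup and reusing it, as described above, keeps the task-creation code itself down to a single call to something like taskURL.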

To learn more about HTTP tasks, see this document.

Medium: Build a custom tool to deploy cron jobs

App Engine used to take care of all the cron job stuff for you, but Cloud Run does not. At the time of this writing, Cloud Run has a beta command that'll create/update cron jobs from a YAML file, but it's not GA yet.

I built a simple tool that calls gcloud scheduler jobs list, parses the JSON output, and compares it to a custom YAML file that describes my cron jobs. Because cron jobs do not refer to an App Engine project, they can reference any HTTP endpoint or a particular Cloud Run service. My YAML file has an option for the Cloud Run service name, and my tool looks up the Cloud Run URL for that service and appends the path onto it.

It's not a lot of work, but it's work that I had to do in order to make my workflows make sense again.
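The heart of a tool like that is a comparison between the jobs you want and the jobs that exist. The sketch below shows one way that comparison could look in Go. The Job struct and its fields are a simplified, hypothetical shape (not the real gcloud output format), and parsing the YAML file and the gcloud JSON is left out entirely.

```go
package main

import (
	"fmt"
	"sort"
)

// Job is a simplified, hypothetical view of a cron job: a name, a schedule, and a target URL.
type Job struct {
	Name     string
	Schedule string
	URL      string
}

// diffJobs compares the desired jobs with the existing ones and returns the names
// of jobs to create, to update (same name, different settings), and to remove.
func diffJobs(desired, existing []Job) (create, update, remove []string) {
	have := make(map[string]Job, len(existing))
	for _, j := range existing {
		have[j.Name] = j
	}
	want := make(map[string]bool, len(desired))
	for _, j := range desired {
		want[j.Name] = true
		old, ok := have[j.Name]
		switch {
		case !ok:
			create = append(create, j.Name)
		case old != j:
			update = append(update, j.Name)
		}
	}
	for _, j := range existing {
		if !want[j.Name] {
			remove = append(remove, j.Name)
		}
	}
	sort.Strings(create)
	sort.Strings(update)
	sort.Strings(remove)
	return create, update, remove
}

func main() {
	desired := []Job{
		{Name: "nightly-report", Schedule: "0 3 * * *", URL: "https://svc.example.run.app/cron/report"},
		{Name: "cleanup", Schedule: "30 4 * * *", URL: "https://svc.example.run.app/cron/cleanup"},
	}
	existing := []Job{
		{Name: "cleanup", Schedule: "0 4 * * *", URL: "https://svc.example.run.app/cron/cleanup"},
		{Name: "old-job", Schedule: "0 0 * * *", URL: "https://svc.example.run.app/cron/old"},
	}
	create, update, remove := diffJobs(desired, existing)
	fmt.Println("create:", create)
	fmt.Println("update:", update)
	fmt.Println("remove:", remove)
}
```

Each of the three lists can then be turned into the corresponding create, update, or delete call for the scheduler.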

Also note that the human-readable cron syntax ("every 24 hours") is gone and you'll need to use the standard "minute hour day-of-month month day-of-week" syntax that you're used to in most other cron-related things. You can also specify which time zone each cron job should use for interpreting that syntax.

To learn more about the cron syntax, see this document.

Medium: Update your custom domains

At minimum, you will now have a completely different URL for your application. If you're using custom domains, you can use Cloud Run's custom domains much like you would use App Engine's custom domains.

However, as far as Google is concerned, this is basically removing a custom domain from App Engine and creating a custom domain in Cloud Run. This means that it'll take anywhere from 20-40 minutes for HTTPS traffic to your domain to work after you make the switch.

Try to schedule this for when you have minimal traffic. As far as I can tell, there's nothing that you can do to speed it up. You may wish to consider using a load balancer instead (and giving the load balancer the TLS certificate responsibilities), but I didn't want to pay extra for a service that's basically free and only hurts me when I need to make domain changes.

Medium: Enable compression

Because App Engine ran behind Google's CDN, compression was handled by default. Cloud Run does no such thing, so if you want compression, you'll have to do it yourself. (Yes, you could pay for a load balancer, but that doesn't reduce your costs by 100%.) Most HTTP frameworks have an option for it, and if you want to roll your own, it's fairly straightforward.

I ended up going with https://github.com/NYTimes/gziphandler. You can configure a minimum response size before compressing, and if you don't want to compress everything (for example, image files are already compressed internally these days), you have to provide it with a list of MIME types that should be compressed.

Hard: Enable caching

Because App Engine ran behind Google's CDN, caching was handled by default. If you set a Cache-Control header, the CDN would take care of doing the job of an HTTP cache. If you did it right, you could reduce the traffic to your application significantly. Cloud Run does no such thing, and your two practical options are (1) pay for a load balancer (which does not reduce your costs by 100%) or (2) roll your own in some way.

There's nothing stopping you from dropping in a Cloud Run service that is basically an HTTP cache that sits in front of your actual service, but I didn't go that route. I decided to take advantage of the fact that my application knew more about caching than an external cache ever could.

For example, my application knows that its static files can be cached forever because the moment that I replace the service with a new version, the cache will die with it and a new cache will be created. For an external cache, you can't tell it, "hey, cache this until the backing service randomly goes away", so you have to set practical cache durations such as "10 minutes" (so that when you do make application changes, you can be reasonably assured that they'll get to your users fairly quickly).

I ended up writing an HTTP handler that parsed the Cache-Control header, cached things that needed to be cached, supported some extensions for the things that my application could know (such as "cache this file forever"), and served those results, so my main application code could still generally operate exactly as if there were a real HTTP cache in front of it.

Extra: Consider using "alpine" instead of "scratch" for Docker

I use statically compiled binaries (written in Go) for all my stuff, and I wasted a lot of time using "scratch" as the basis for my new Cloud Run Dockerfile.

The tl;dr here is that the Google libraries really, really want to validate the certificates of the Google APIs, and "scratch" does not install any CA certificates or anything. I was getting nasty errors about things not working, and it took me a long time to realize that Google's packages were upset about not being able to validate the certificates in their HTTPS calls.

If you're using "alpine", just add apk add --no-cache ca-certificates to your build.

Also, if you do any kind of time zone work (all of my application's users and such have a time zone, for example), you'll also want to include the timezone data (otherwise, your date/time calls will fail).

If you're using "alpine", just add apk add --no-cache tzdata to your build. Also don't forget to set the system timezone to UTC via echo UTC > /etc/timezone.

Extra: You may need your project ID and region for tooling

Once running, your application can trivially find out what its project ID and region are from the "computeMetadata/v1" APIs, but I found that I needed to know these things in advance while running my deployment tools. Your case may differ from mine, but I needed these in advance.

I have multiple projects (for example, a production one and a staging one), and my tooling needed to know the project ID and region for whatever project it was building/deploying. I added this to a config file that I could trivially replace with a copy from a directory of such config files. I just wanted to mention that you might run into something similar.

Extra: Don't forget to disable App Engine

Once everything looks good, disable App Engine in your project. Make sure that you unlinked your Datastore first, otherwise you'll also disable that when you disable App Engine.

Extra: Wait a month before celebrating

While App Engine's quotas reset every day, Cloud Run's reset every month. This means that you won't necessarily be able to prove out your cost savings until you have spent an entire month running in Cloud Run.

After a few days, you should be able to eyeball the data and check the billing reports to get a sense of what your final costs will be, but remember: you get 48 hours of free CPU time before they start charging you, and if your application is CPU heavy, it might take a few days or weeks to burn through those free hours. Even after going through the free tier, the cost of subsequent usage is quite reasonable. However, the point is that you can't draw a trend line on costs based on the first few days of running. Give it a full month to be sure.

Extra: Consider optimizing your code for CPU consumption

In an instance world like App Engine, as long as your application isn't using up all of the CPU and forcing App Engine to spawn more instances or otherwise have requests queue up, CPU performance isn't all that important. Ultimately, it'll cost you the same regardless of whether you can optimize your code to shave off 20% of its CPU consumption.

With Cloud Run, you're charged, in essence, by CPU cycle, so you can drastically reduce your costs by optimizing your code. And if you can keep your stuff efficient enough to stay within the free tier, then your application is basically free. And that's wild.

If you're new to CPU optimization or haven't done it in a while, consider enabling something like "pprof" on your application and then capturing a 30-second workload. You can view the results with a tool like KCachegrind to get a feel for where your application is spending most of its CPU time and see what you can do about cleaning that up. Maybe it's optimizing some for loops; maybe it's caching; maybe it's using a slightly more efficient data structure for the work that you're doing. Whatever it is, find it and reduce it. (And start with the biggest things first.)

Conclusion

Welcome to Cloud Run! I hope your cost savings are as good as mine.

Edit (2022-10-19): added caveats about HTTP compression and caching.